Poseidon Merkle Trees in Hardware

We report the first fully-pipelined FPGA architecture for ZKP-friendly Merkle trees using the Poseidon hash

5/24/23

Irreducible Team

In a previous blog post, we discussed our modular, scalable, and fully-pipelined FPGA architecture for the number-theoretic transform. Here we continue our work in the acceleration of ZK proof-generation with the very first fully pipelined FPGA implementation of a Merkle tree construction, instantiated with the Poseidon hash function.

A Merkle tree is a classic cryptographic primitive which hashes a large sequence of items, and moreover makes possible small proofs that given particular items are included in the sequence. Merkle trees have been widely used throughout blockchain designs, beginning with Bitcoin’s, in which Merkle trees make possible cheaply verifiable proofs of transaction inclusion. They also arise as an essential primitive in several modern ZKP constructions, such as STARKs, Redshift, and Plonky2, and even in classic constructions dating back to 1992!

Constructing a Merkle tree over a large sequence boils down to computing a large number of hashes, mostly in parallel. For common hash function families like SHA-2, Keccak, and Blake2, this computation is very efficient on modern CPUs, which may even feature hash-specific instructions already accelerated by the processor. Many ZKPs in production now, however, use alternative hash functions designed for recursive ZKP verification, such as Poseidon, which is ~9x slower than Keccak on modern CPUs. The proof systems of Polygon zkEVM, Plonky2, and RISC Zero all generate Merkle trees using a Poseidon hash, and observe that this step constitutes a major performance bottleneck for the prover.

We present here the hardware architecture for a leaf-hashing engine and a branch-hashing engine, which together compute Merkle trees to the specification of the Plonky2 and Polygon zkEVM proof systems. We measure and report several metrics like latency, throughput, power consumption, energy efficiency and device utilization. By comparing our non-optimised version to one of the most efficient software benchmarking results, we achieved around 1.5x speedup improvement consuming only 138 W. Furthermore, we demonstrate how these modules can be combined with our previously presented low-degree extension (LDE) module to perform full, batched polynomial commitments in the hardware accelerator.

Background

Merkle Trees

Merkle trees (MT) are a type of tree where every node is labelled with a cryptographic hash. Any collision-resistant hash function may be used as the building block for labeling nodes. Merkle trees can be used to commit to a vector of data while allowing individual items to be verifiably revealed. The leaves of the tree are labelled with the hashes of the vector entries, and each non-leaf node is labelled with the hash of the concatenation of its children’s labels. The hash of the root node of the tree, known as the Merkle root, commits to the vector, in the sense that it is computationally infeasible to find a different vector whose Merkle tree shares the same root. A Merkle branch for a particular vector index is collection of child hashes of all ancestors of that entry in the tree. A Merkle branch suffices to convince any verifier of the data at that vector index.

When a Merkle tree is instantiated with an arity of 2—i.e., when all non-leaf nodes have exactly two children—it is called a binary Merkle tree. An inclusion proof, or branch, for a binary Merkle tree with \(n\) leaves consists of just \(\lceil \log_2 n \rceil - 1\) hash digests, and a verifier can check it with just \(\lceil \log_2 n \rceil\) hash evaluations. In this post we focus on the case of binary Merkle trees, because that is the structure used in Plonky2, though the architecture we present generalizes to higher-arity structures.

Merkle Trees in zk-STARKs

Certain cryptographic argument systems, notably zk-STARKs and Plonky2, depend on Merkle trees. This dependence arises from a well-known transformation of Interactive Oracle Proofs (IOPs) into Interactive Proofs. Consequently, the majority of the proof data for these protocols are simply Merkle branches, and the bulk of the verification time is spent checking them.

When the verification is performed on a CPU or in a smart contract, SHA-256 and Keccak are secure and efficient choices for the Merkle tree hash function. Many practical deployments of these proof systems, however, use the technique of recursive verification to achieve better tradeoffs between proving and verification costs. In the recursive setting, SHA-256 and Keccak incur high arithmetization costs. To mitigate the issue, several arithmetization-friendly hash functions have been developed, notably Poseidon and RescuePrime.

While significantly lowering the arithmetization costs, these arithmetizaion-friendly hash functions require substantial computational resources. For example, FPGA implementations of Keccak can be attained using a few thousand FPGA slices, orders of magnitude smaller than Poseidon. This stark difference mainly stems from Keccak’s 5-bit binary field S-Box, which fits into just a few FPGA look-up tables (LUTs); this contrasts with the finite field exponentiation required for Poseidon, as well as the the latter hash’s larger number of rounds. More recently, Poseidon v2 and Tip5 have emerged as supposedly more performant variants of the former two algorithms, respectively. However, as of the time of writing, it remains too early to consider switching, and Poseidon persists in the specifications of various state-of-the-art schemes, such as Plonky2. Moreover, investigation into the hardware performance of the two novel designs is needed.

In the remainder of this post, we focus on the Poseidon parameterization shared by Plonky2 and Polygon zkEVM. However, our findings and architectures apply to all MT constructions over large input datasets.

Merkle Hashing in Polygon zkEVM

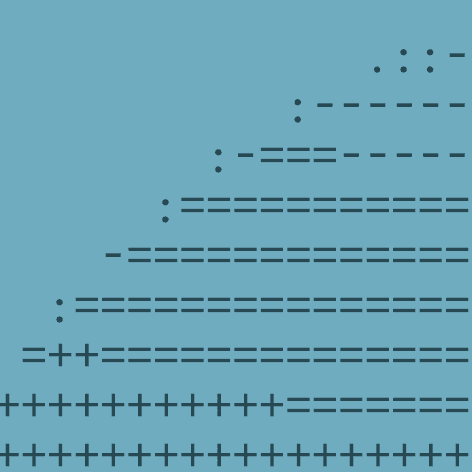

Given an execution trace matrix with \(r\)rows and \(c\) columns—where each column represents a polynomial with \(r\) coefficients—and LDE blowup factor \(b\), the largest MT is computed over the \(b \cdot r \cdot c\) elements of the LDE matrix in three steps:

index the rows in bit-reversed order,

hash every row (data block) to form MT leaves, and

hash MT branches into the MT root.

We depict the MT computation of the LDE matrix in Figure 1.

Fig. 1: Merkle tree computation of the LDE matrix.

In practice, \(b \cdot r \cdot c\) amounts to a substantial hash input volume. For example, Polygon zkEVM uses \(r = 2^{23}\) rows, \(c = 665\) columns, and blowup factor \(b = 2\) in just the first round of the STARK IOP, yielding 83 GB of data to be hashed into leaves. In theory, leaf-hashing can be done using \(b \cdot r\) parallel hashing engines. However, branch-hashing is sequential in the layers of the tree, requiring \(\frac{b \cdot r}{2} + \frac{b \cdot r}{4} + \ldots + 1 = b\cdot r - 1\) additional hash computations.

Leaf-Hashing Engine

We present a fully pipelined leaf-hashing engine (LHE) that sustains the input throughput using a single hashing core. Our design, depicted in Figure 2, is easily adapted to any hash primitive, under the following assumptions:

field element width is \(w\) bits,

hash function block input width is \(N \cdot w\), where \(N\) is a positive integer,

hash function state width is \(S \cdot w\), where \(S\) is a positive integer,

hash function digest width is \(D \cdot w\), where \(D\) is a positive integer, and

input streaming interface width at most \(N \cdot w\), otherwise more than one hashing core is needed.

Fig. 2: Generic leaf-hashing engine architecture.

Streaming one column of the LDE matrix, i.e., one polynomial, into the LHE takes \(\frac{b \cdot r}{N}\) cycles. Row-wise hashing can start when \(N\) columns are available in the LHE. HBM can be used to design large fully-pipelined transpose modules as shown in our previous NTT blog post.

The hash core hashes \(b \cdot r\) rows in a round-robin fashion, storing the intermediate \(S\)-element wide state in the HBM. As long as the \(b \cdot r\) product exceeds the hash module latency, full throughput can be attained. During the processing of the \(N\) final columns, LHE streams out \(b \cdot r\) of the \(D\)-element digests.

Notice that the \(b \cdot r\) product directly impacts the amount of memory required to store the interleaved states of subsequent hashes. However, any increase in the number of columns \(c\) does not increase the circuit complexity or the throughput, but rather induces only a linear increase in total computation latency. Therefore, scaling the trace matrix using additional columns linearly increases design latency, while imposing negligible impact on the throughput and resource utilization of this streaming architecture.

Branch-Hashing Engine

The branch-hashing engine (BHE) compresses LHE outputs into the MT root. Let LHE output digests \(d_{0, 0}, d_{0, 1}, \ldots, d_{0, b \cdot r - 1}\) in \(b \cdot r\) cycles. BHE can start hashing every 2 cycles, when pairs of depth-0 digests \((d_{0, 0}, d_{0, 1}), (d_{0, 2}, d_{0, 3}), \ldots, (d_{0, b \cdot r - 2}, d_{0, b \cdot r - 1})\) become available. Therefore computing depth-1 digests utilizes 50% of the hash core input bandwidth.

$$\begin{align*} d_{1, 0} &= \mathrm{hash}(d_{0, 0} \| d_{0, 1}) \\ d_{1, 1} &= \mathrm{hash}(d_{0, 2} \| d_{0, 3}) \\ \ldots \\ d_{1, \frac{b \cdot r}{2}-1} &= \mathrm{hash}(d_{0, b \cdot r - 2} \| d_{0, b \cdot r - 1}) \end{align*}$$

BHE does not wait for for the entire depth-0 MT layer to be computed. As soon as the first pair of depth-1 digests \((d_{1, 0}, d_{1, 1})\) is computed, BHE can compute depth-2 digest \(d_{2, 0} = \mathrm{hash}(d_{1, 0} \| d_{1, 1})\). Hence, a depth-2 digest is computed once every 4 cycles (offset by the latency of the hash core); i.e., depth-2 digests utilize 25% of the hash core input bandwidth. Since the number of digests in every subsequent layer is halved, required hash core utilization asymptotically approaches 100%. Therefore, a fully pipelined design is realized with just a single hash core.

This approach has four major benefits:

full-throughput branch hashing can be achieved using a single hash core,

memory requirement for storing MT layers is \(\mathcal{O}(\log b \cdot r)\), therefore it can easily fit into on-chip BRAM memory,

for sufficiently large \(b \cdot r\), latency of the hash core is hidden, and

it is trivial to choose a smaller stopping depth if MT caps are needed instead of the MT root, or to output digests of intermediate branch hashes.

We depict the generic BHE architecture in Figure 3.

Fig. 3: Generic branch-hashing engine architecture.

Merge blocks combine pairs of digests in the stream. Priority encoders ensure that digests in higher-depth layers are processed first and that shallow FIFOs do not overflow.

Notice that there is nothing specific to the underlying hash function in this architecture. This architecture can be applied to any other (tree) compression algorithm, with minimal changes.

Lastly, notice that LHE outputs \(b \cdot r\) digests once in every \(\lceil \frac{c}{N} \rceil \cdot b \cdot r\) cycles. Therefore, while LHE sustains its input throughput, the input throughput of the BHE is inherently \(2 \cdot \lceil \frac{c}{N} \rceil\) times lower. This inherent feature of MT accelerators allows serialized implementations of the BHE hash engine to keep up with the fully-pipelined LHE.

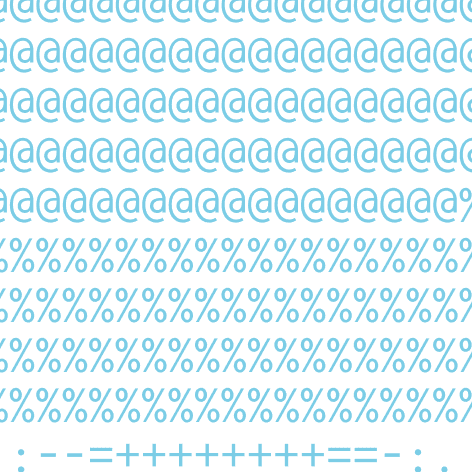

Poseidon Hash in Plonky2

The Poseidon hash is a cryptographic sponge function, iterating the Poseidon permutation \(pi\). The sponge state is divided into rate (R) and capacity (C) bits (see Figure 4). From the user's perspective, the sponge operates on R-sized blocks, which it absorbs into the R portion of the state, as it performs permutations on this state. In the squeezing phase ,a portion of the state is output as a digest. Sponges are variable-length output functions, whereby output sizes exceeding the state size can be attained, by means of additional permutations of the state.

Fig. 4: Overview of the Poseidon sponge function.

The Plonky2 Poseidon hash instance operates on 64-bit Goldilocks (GL64) elements (for a recap of GL64 arithmetic, see the NTT blog post). It uses 512-bit, 8-element, rate and 256-bit, 4-element, capacity, i.e., 12-element state. Poseidon permutation \(pi\) is a substitution–permutation network, devised with the wide-trail strategy in mind. Each round of the permutation consists of three layers:

the addition of round constants, which breaks symmetry between rounds,

the S-box layer, a non-linear mapping providing confusion, and

the maximum-distance separable (MDS) matrix multiplication, which provides diffusion.

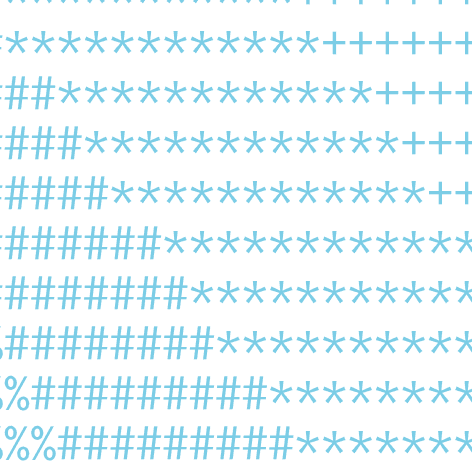

The Plonky2 Poseidon hash function employs 8 full and 22 partial rounds. The full round structure is depicted in Figure 5. Partial rounds replace all but one S-box with an identity function. We elaborate on the implementation aspects of the permutation in the following sections.

Fig. 5: Full round structure in Poseidon hash.

Round-Constant Addition

Round-constant addition requires a single GL64 modular adder. A total of 30 × 12 = 360 adders are required, each adding a different constant.

S-Box

The Plonky2 Poseidon hash S-Box is a mapping \(x \to x^7\) in the GL64 field. We implement the mapping using 2 modular squaring and 2 modular multiplication modules as shown below. A total of 118 S-Boxes are required, 8 × 12 for the full rounds, and 22 × 1 for the partial rounds.

$$\begin{align*}x_1 &= x \\x_2 &= x_1^2 \\x_4 &= x_2^2 \\x_3 &= x_1 \cdot x_2 \\x_7 &= x_3 \cdot x_4 \end{align*}$$

MDS Matrix Multiplication

The selected MDS matrix consists of low Hamming weight coefficients. The largest coefficient is \(41 \lt 2^6\). Thus it is feasible to implement each constant multiplication as a sum of shifted input values. We represent constants using non-adjacent form (NAF). For an \(n\)-bit constant, NAF representation requires on average \(n / 3\) shifted summands, instead of \(n / 2\) when unsigned representation is used.

Note that the Poseidon paper proposes an optimization that leverages the sparsity of S-Boxes in partial rounds, and results in a fewer constant multiplications in the MDS layers of these rounds. However, the modified constants are significantly larger, with larger Hamming weights. This makes shift-and-add multiplication prohibitively expensive and requires dedicated hardware multipliers. As a result, we find this method to be sub-optimal in hardware implementations.

Implementation Report

As previously discussed, LHE must leverage a fully-pipelined hash engine in order to keep up with the throughput of the input 512-bit AXIS interfaces clocked at 250 MHz. Throughout the rest of the blog, we call this engine LHE-S118, as it implements all 118x S-boxes in a pipeline fashion. We also implement BHE-S118 version accordingly. The LHE-S118 and BHE-S118 modules are implemented in separate FPGA devices which communicate over the 128 Gbps network using AMD/Xilinx’s Aurora protocol.

The full MT design, including decomposition of LHE-S118 and BHE-S118 into two separate FPGA devices, is illustrated in Figure 6.

Fig. 6: Full Merkle tree design implemented in two FPGA devices.

Measurement Setup

In order to perform the hardware tests, we implement the above LHE-S118 and BHE-S118 modules on two AMD/Xilinx U55C FPGA boards. Both FPGA boards are hosted inside our Gen1 server, with the following specifications:

Lenovo SR655 server

AMD 7313P 16C 3.0 GHz 150 W

512 GB TruDDR4 3200 MHz

6x PCIe Gen4 x16 slots

Redundant 1600 W PSU

6x AMD/Xilinx U55C FPGA cards

AMD/Xilinx VU47P FPGA

2x PCIe Gen4 x8

16 GB HBM

We measure and report the most important metrics like latency, throughput, power consumption, energy efficiency and device utilisation. For the latency, we measure each of the LHE-S118 and BHE-S118 modules separately (i.e., we implement each module in PCIe to PCIe configuration with 512-bit AXIS interfaces clocked at 250 MHz), whereas for throughput measurements we combine both of the modules together by using Aurora protocol (i.e., we implement the whole MT design in PCIe to Aurora to PCIe configuration with 512-bit AXIS interfaces clocked at 250 MHz).

Latency

In order to precisely analyze latency, we perform hardware timestamping within the FPGA, as presented in Figure 7. The timestamps are captured when input traffic hits the module T₀ and when the output traffic leaves it T₁. The latency values (T₁ – T₀) are stored in the control and status register (CSR) bank and the CPU can read them out after the each executed test.

Fig. 7: Overview of the timestamping mechanism in FPGA.

We report minimal (min), mean (avg), maximal (max) and median (med) latency in Table 1.

Table 1: Latency measurement results of LHE-S118 and BHE-S118 modules.

We discuss latency distributions for the implemented modules:

LHE-S118 exhibits constant latency distribution. This profile shows that our HBM stream controller architecture remedies the performance drops caused by HBM's physical properties such as refresh time.

BHE-S118 exhibits a narrow, 0.11 us wide, latency distribution caused by the latency variations attributed to the PCIe streaming. Unlike LHE, where a single hash follows every input word, BHE has to hash some of the remaining \(b \cdot r - 1\) branches after the final input is received.

Throughput

For the throughput we focused on measurements from the software perspective, as this is more realistic scenario for a ZKP acceleration in the production setup. Our software stack does its best effort to push as much data as possible via the PCIe to the FPGA to compute. For each MT root computation we stream via PCIe a matrix of 136 columns by 2²⁴ rows, amounting to 17 GB of data. While the theoretical throughput for the PCIe Gen4 x8 lanes is 128 Gbps, we hit a host-to-card throughput limitation imposed by the 128 MB L3 cache size in our x86 architecture host, as previously discussed in our NTT blog post. The MT input size is several orders of magnitude higher than the L3 cache size, which throttles the PCIe TX throughput down to 24.5 Gbps. We stress here that with additional effort the PCIe TX throughput for this particular computation can be optimized substantially; we will explore several techniques for alleviating this performance bottleneck in the Performance Analysis chapter.

The results are reported in Table 2.

Table 2: Throughput measurement results of the full Merkle tree design.

Note: MT/s in the table stands for Merkle tree root computations per second, whereas MH/s stands for the number of mega hashes per second.

Power Consumption

We measure the total power consumption of the server, and report the values that only specify the power consumption of the two FPGA devices running the LHE and BHE modules (reported figures, refer to Table 3, do not include the baseline power consumption of an idle server equal to 110 W). It is important to indicate that the standard PCIe slot power consumption is limited to 75 W and that we provide auxiliary power supply to the FPGA boards, which is required for more power-hungry hardware modules.

Table 3: Power consumption results of the two FPGA boards running MT design.

Energy Efficiency

Energy efficiency is the key performance metric since it defines the baseline costs of the MT hash, and associated ZKP computations. It combines computation throughput [MT/s] and power consumption [W]. Since the power/time [Ws] product is energy [J], the energy efficiency is often reported in [MT/J] units. Similar to the throughput results, we report the energy efficiency results for both [MT/J] and [MH/J] in Table 4.

Table 4: Energy efficiency results of the two FPGA boards running MT design.

Resource Utilization

The following resource utilization is reported per each module (see Table 5). The figures represent only the target design utilization and do not provide insights into additional resources used, such as the PCIe or Aurora interface hardware controllers.

Table 5: Resource utilization for LHE-118 and BHE-118 modules.

Performance Analysis

Our current solutions can perform the \(c = 130, b = 2, r = 2^{23}\) MT hash using Plonky2 Poseidon instance in 5.55 s, in a fully-pipelined fashion, consuming 138 W on average. We select these parameters as they correspond to the second round commitment operation in Polygon zkEVM (v0.7.0.0-rc.7-fork.1 release), noting that the MT inputs must be padded to 136 columns, the nearest multiple of the Poseidon rate.

Our LHE and BHE engines are underutilized, however, because of the lowered rate of the incoming data stream via PCIe. We estimate the best theoretical latency of our LHE and BHE engines combined is 1.27 s, assuming the 128 Gbps for PCIe Gen4 x8 TX stream instead of the 24.5 Gbps we attain right now. We explore several avenues for future improvements:

optimizing our software stack,

upgrading CPU to chip with more L3 cache,

upgrading to PCIe Gen4 x16 lanes streaming for theoretical data rate of 256 Gbps,

working on system integration whereby the LDE and LHE modules would be connected directly via Aurora network interface - a hardware to hardware communication protocol unhindered by the host CPU and PCIe protocol.

Moreover, we can employ multiple FPGAs to parallelize the task, e.g., 4 FPGAs for LHE and 2 FPGAs for BHE (as the BHE input throughput is order of magnitude smaller than LHE one we can do this asymmetrically). In this case, the best theoretical latency estimate for the entire MT root computation, based on the PCIe Gen4 x8 lanes protocol, would be 333 ms using our 6-FPGA Gen1 server.

We can benchmark our solution against the open-source Polygon zkEVM software implementation, which uses power-hungry AVX2 vectorized instructions. Running Polygon’s MERKLE_TREE_BENCH_AVX for \(c = 130, b = 2, r = 2^{23}\) on a 128 vCPU AWS c6i.metal instance measures the computation time at 8.49 s. In comparison, our current single-FPGA solution performs the same operation in 5.5 s, yielding a 1.53x speedup while consuming only 138 W.

Conclusion

We present a generic, fully-pipelined method to compute MT hashes. This is the first report on such an implementation, to the best of our knowledge. Large input data size, as well as the data access patterns make this a seemingly impossible feat. However, owing to the flexible and highly parallelized memory hierarchy of HBM-enabled FPGAs we are able to achieve this. Decentralization of memory accesses that HBM provides, as opposed to the DDR-based systems, opens new horizons for such applications, especially when integrations with full nodes comes into play.

We use this example to demonstrate our first multi-FPGA design, as well as the first step into exploration of multi-board computational clusters in the data-bound compute systems such are the ZKP. In this setting, both the generic LHE and BHE architectures can be applied on other computational problems involving cryptographic compression of large data sets in a tree-like manner.